Introduction to Artificial Intelligence

History and evolution of AI

Learning objectives:

- Gain basic knowledge of the historical milestones of AI.

Introduction

Early developments and milestones in the history and evolution of AI

The beginnings of artificial intelligence (AI) can be traced back to the 1940s and 1950s, when scientists first began working on machines that could exhibit intelligent behavior. Early developments and milestones in the field of AI include:

Turing Test

One of the pivotal figures in the early history of AI was Alan Turing, a British mathematician and computer scientist often called the father of modern computing. In 1950, he published a paper entitled "Computing Machinery and Intelligence," in which he proposed what he called the "imitation game," now known as the "Turing Test": a measure of a machine's ability to display intelligent behavior equivalent to, or indistinguishable from, that of a human.

The test involves a human evaluator who engages in natural language conversations with another human and a machine and must determine which is the machine. If the evaluator cannot reliably distinguish the machine from the human, the machine is said to have passed the Turing Test.

The test is still referred to today as a benchmark for judging how human-like a machine's conversation is.

Artificial Neural Networks

In 1943, Warren McCulloch and Walter Pitts published a paper proposing a mathematical model of the neuron, inspired by the structure and function of the human brain, which became the basis of artificial neural networks. These networks consist of interconnected artificial "neurons" that process and transmit information; later work made such networks capable of learning through experience.
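A McCulloch-Pitts style neuron can be sketched, in a common simplified form, as a threshold unit: it outputs 1 when the weighted sum of its binary inputs reaches a threshold, and 0 otherwise. In this sketch the weights and thresholds are hand-picked rather than learned, and the function names (`mcp_neuron`, `logical_and`, `logical_or`) are hypothetical.

```python
def mcp_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Hand-chosen parameters: the same unit computes AND or OR depending on its threshold.
def logical_and(a, b):
    return mcp_neuron([a, b], [1, 1], threshold=2)

def logical_or(a, b):
    return mcp_neuron([a, b], [1, 1], threshold=1)

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Changing only the threshold turns the same unit from AND into OR, which is the sense in which networks of such units can represent logical functions.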

In 1948, Norbert Wiener published a book called "Cybernetics" that outlined the principles of feedback and control in living organisms and machines.

General Problem Solver

In 1957, Herbert Simon, Allen Newell, and J. C. Shaw developed the General Problem Solver (GPS), one of the first AI programs designed to solve a wide range of problems using a combination of search and logical reasoning (a strategy known as means-ends analysis).

GPS was applied to puzzles such as "Missionaries and Cannibals," which involves transporting a group of missionaries and cannibals (classically three of each) across a river in a boat that holds at most two people, without the cannibals ever outnumbering the missionaries on either bank.
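To make the puzzle concrete, here is a breadth-first search over puzzle states. This is not how GPS itself worked (GPS used means-ends analysis); BFS is swapped in as the simplest search that finds a shortest solution. The sketch assumes the classic version: three missionaries, three cannibals, a boat carrying one or two people, and cannibals never outnumbering missionaries on a bank where missionaries are present. A state is `(missionaries_on_left, cannibals_on_left, boat_on_left)`; all names are illustrative.

```python
from collections import deque

N = 3  # number of missionaries, and of cannibals
MOVES = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]  # (missionaries, cannibals) in the boat

def is_safe(m, c):
    """Missionaries must never be outnumbered on a bank where any are present."""
    left_ok = m == 0 or m >= c
    right_ok = (N - m) == 0 or (N - m) >= (N - c)
    return left_ok and right_ok

def successors(state):
    m, c, boat_left = state
    sign = -1 if boat_left else 1  # a boat on the left carries people away from the left bank
    for dm, dc in MOVES:
        nm, nc = m + sign * dm, c + sign * dc
        if 0 <= nm <= N and 0 <= nc <= N and is_safe(nm, nc):
            yield (nm, nc, not boat_left)

def solve(start=(N, N, True), goal=(0, 0, False)):
    """Breadth-first search; returns the shortest list of states from start to goal."""
    frontier = deque([start])
    parent = {start: None}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:  # walk back through recorded parents
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

if __name__ == "__main__":
    for m, c, boat_left in solve():
        print(f"left bank: {m}M {c}C | boat on the {'left' if boat_left else 'right'}")
```

Because BFS expands states in order of distance from the start, the first time it reaches the goal it has found a solution with the fewest crossings (11 for the classic puzzle).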

ELIZA

In 1966, Joseph Weizenbaum developed ELIZA, a program that could hold conversations with human users using a set of rules and a script.

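ELIZA responded to simple user queries and statements by matching keywords and rephrasing the user's own words according to its script. Although it did not truly understand language, it was one of the first AI programs to demonstrate natural language processing capabilities.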
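A minimal sketch of ELIZA-style keyword matching, assuming a tiny hand-written rule set: the rules, the `REFLECTIONS` table, and the `respond` function are illustrative, not Weizenbaum's actual DOCTOR script. Each rule pairs a regular expression with a response template, and captured text is "reflected" by swapping first- and second-person words before it is echoed back.

```python
import re

# Swap first- and second-person words so echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my", "are": "am"}

# Illustrative rules: (pattern, response template), checked in order.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"(.*)", re.IGNORECASE), "Please go on."),  # fallback matches anything
]

def reflect(fragment):
    """Replace each word using REFLECTIONS, leaving other words unchanged."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())

def respond(statement):
    """Return the response of the first rule whose pattern matches the whole statement."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return "Please go on."

if __name__ == "__main__":
    print(respond("I need a holiday."))
    print(respond("I am worried about my exams"))
```

No meaning is represented anywhere: the program only rearranges the user's words, which is why ELIZA could appear to understand without doing so.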

Expert Systems

In the 1970s and 1980s, researchers developed the concept of "expert systems," which are AI programs designed to mimic the decision-making abilities of a human expert in a specific field.

Expert systems could analyze data and make recommendations or decisions based on the knowledge encoded in them by human experts.

Deep Blue

In 1997, the computer chess program Deep Blue made history by defeating world chess champion Garry Kasparov in a six-game match.

Deep Blue could analyze and evaluate about 200 million chess positions per second and choose its moves based on that analysis.

These early developments and milestones in the history of AI laid the foundation for many of the advances made in the field in recent years, paving the way for intelligent systems that can perform a wide variety of tasks.

The Birth of Artificial Intelligence

Pre-20th century roots of AI

The origins of artificial intelligence (AI) can be traced back to ancient civilizations, but the modern field of AI began to take shape in the mid-20th century. Before that, several key developments laid the foundation for the field.

One of the earliest known expressions of the idea behind AI is the myth of Pygmalion, a sculptor in ancient Greek mythology who created a statue of a woman so realistic that it came to life. This myth highlights the idea of creating artificial beings that can mimic human behavior and intelligence.

In the 17th and 18th centuries, advances in mathematics and logic laid further groundwork for the development of AI. In particular, the work of mathematician and philosopher Gottfried Leibniz on symbolic logic and his proposed "calculus of reasoning" (calculus ratiocinator) influenced later AI researchers.

Overall, the pre-20th century roots of AI can be traced back to ancient mythology and to the development of mathematics, logic, and psychology; in the 20th century, these ideas were carried forward by the pioneering work of Turing and other early AI researchers. These foundations laid the groundwork for the modern field of AI and continue to influence research today.

Early concepts and theories of artificial intelligence

The idea of artificial intelligence has been around for centuries, with thinkers such as Ada Lovelace in the 19th century and Alan Turing in the 20th considering the possibility of creating intelligent machines. However, significant progress in the development of AI was only made from the mid-20th century onward.

One of the early concepts in AI was the idea of artificial neural networks, which were inspired by the structure and operation of the human brain. Neural networks are composed of interconnected nodes, or "neurons," that process and transmit information. They can learn by adjusting the strength of the connections between neurons based on experience, allowing them to recognize patterns and make decisions based on that recognition.

Another early concept in AI was the idea of expert systems, which are computer programs that simulate the decision-making abilities of a human expert in a particular field. Expert systems use a set of rules and a knowledge base to make decisions and solve problems. They are typically used in domains with a lot of specialized knowledge, such as medicine or engineering.

Another essential concept in AI is the idea of machine learning, which is the ability of a machine to improve its performance on a task through experience. Machine learning algorithms are developed to analyze data and improve their performance over time by adjusting their internal parameters.
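To make "adjusting internal parameters" concrete, here is a minimal sketch that fits a straight line y ≈ w·x + b to a few labeled points by gradient descent on the mean squared error. The data, learning rate, and step count are made-up illustrative values, and `fit_line` is a hypothetical name.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # internal parameters, initially uninformed
    n = len(xs)
    for _ in range(steps):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Move each parameter a small step in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mse(xs, ys, w, b):
    """Mean squared error of the line w*x + b on the data."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}, error = {mse(xs, ys, w, b):.6f}")
```

The "experience" here is the repeated pass over the data: each step nudges `w` and `b` so that the error shrinks, and after enough steps they settle close to the values that generated the data.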

There are several types of machine learning, including supervised learning, in which the machine is trained on labeled data (data with correct 'answers'), and unsupervised learning, in which the machine must learn to recognize patterns in data on its own.
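Unsupervised learning can be illustrated with k-means clustering, one common algorithm chosen here for illustration: given unlabeled points and a number of clusters k, it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The 2-D data and the naive initialization (the first k points) are illustrative assumptions.

```python
import math

def kmeans(points, k, iterations=100):
    """Cluster 2-D points into k groups without labels; returns (centroids, labels)."""
    centroids = [tuple(p) for p in points[:k]]  # naive initialization: first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: nothing moved
            break
        centroids = new_centroids
    return centroids, labels

if __name__ == "__main__":
    points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 1.5), (9.0, 8.0)]
    centroids, labels = kmeans(points, k=2)
    print(labels)
    print(centroids)
```

No point carries a "correct answer"; the two groups emerge purely from the distances between points, which is what distinguishes this from the supervised case.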

Another key concept in AI is natural language processing (NLP), which is the ability of a machine to understand and generate human-like language. NLP has many applications, including language translation, chatbots, and voice recognition systems.

Overall, the early concepts and theories of artificial intelligence have laid the foundation for many advancements in the field we see today. These concepts and ideas continue to be refined and developed, leading to new and exciting developments in AI.

The Turing Test and the idea of machine intelligence

The Turing Test measures a machine's ability to exhibit intelligent behavior indistinguishable from a human's. It is named after Alan Turing, a British mathematician and computer scientist who proposed it in 1950.

The Turing Test involves a human evaluator engaging in a natural language conversation with a human participant and a machine. The evaluator is not told which is the machine and which is the human and must decide based on the conversation alone. If the evaluator cannot distinguish the machine from the human with a high degree of accuracy, the machine has passed the Turing Test.

The idea behind the Turing Test is that if a machine can engage in a discussion that is indiscernible from that of a human, then it can be said to have a certain level of intelligence. However, we should note that the Turing Test is not a comprehensive measure of intelligence, as it only focuses on one aspect (i.e., the ability to converse in natural language).

There are some criticisms of the Turing Test as a measure of machine intelligence. For example, some argue that it is too narrow in scope, as it does not consider other aspects of intelligence, such as problem-solving, creativity, or learning ability. Others argue that it is too reliant on the subjectivity of the evaluator, as different evaluators may have different standards for what constitutes "human-like" behavior.

Despite these criticisms, the Turing Test remains widely recognized and influential in artificial intelligence and is often used as a benchmark for developing intelligent systems.

The Rise of Artificial Intelligence

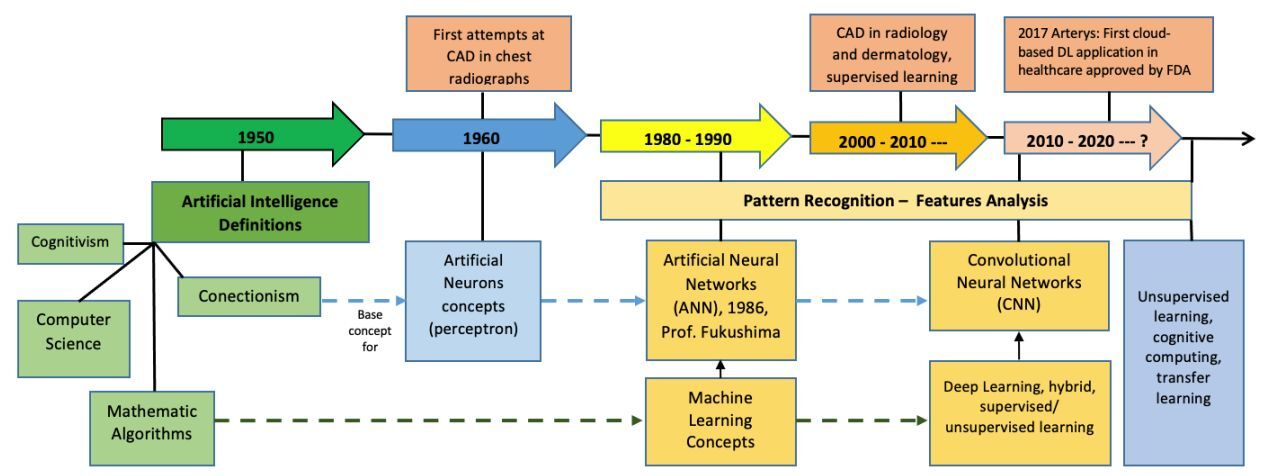

Figure 1. Evolution of artificial intelligence. The development of the concepts of machine learning and deep learning within medicine.

Image credit: Castillo, Darwin, Vasudevan Lakshminarayanan, and María José Rodríguez-Álvarez. 2021. (CC BY 4.0)

The development of artificial neural networks and machine learning

Artificial neural networks (ANNs) have been around for decades and have evolved significantly over time. Here we provide an overview of the development of artificial neural networks and machine learning, including the key milestones, concepts, and techniques that have shaped the field.

The idea of artificial neural networks can be traced back to the 1940s when Warren McCulloch and Walter Pitts proposed a computational model of the human brain that could process information and make decisions based on that processing. They suggested that the brain's neurons, or nerve cells, were connected by synapses and that these connections could be used to transmit and process information.

A few years later, in 1949, psychologist Donald Hebb proposed that the strength of these connections could be adjusted through repeated activity, allowing the brain to learn and adapt to new situations.

The Perceptron

In the 1950s and 1960s, scientists developed more formal models of artificial neural networks based on this idea.

One of the first and most influential models was the Perceptron, introduced by Frank Rosenblatt in 1958. The Perceptron was a simple neural network consisting of a single layer of artificial neurons, or units, that could be trained to recognize patterns in input data (Figure 1).

The Perceptron was able to learn by adjusting the strength of its connections based on the input it received.

While the Perceptron was a significant development, it had important limitations. It could only classify linearly separable data, meaning data whose classes can be divided by a straight line (more generally, a hyperplane) in the input space.

In addition, a single-layer Perceptron cannot learn functions such as XOR ("exclusive or"), whose classes cannot be divided by a single straight line, so it cannot capture more complex patterns that depend on combinations of features.
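A minimal sketch of the perceptron learning rule in a common textbook formulation (the learning rate, epoch count, and names are illustrative assumptions). On AND, which is linearly separable, training reaches an epoch with zero errors; on XOR, which is not, no choice of weights classifies all four points correctly, so errors never reach zero.

```python
def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Train a single perceptron unit on ((x1, x2), target) samples with 0/1 targets.

    Returns (weights, bias, errors_in_last_epoch).
    """
    weights = [0.0, 0.0]
    bias = 0.0
    errors = 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            output = 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0
            delta = target - output  # 0 if correct, +1 or -1 if wrong
            if delta:
                errors += 1
                # Strengthen or weaken each connection in proportion to its input.
                weights[0] += learning_rate * delta * x1
                weights[1] += learning_rate * delta * x2
                bias += learning_rate * delta
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias, errors

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    for name, data in (("AND", AND), ("XOR", XOR)):
        weights, bias, errors = train_perceptron(data)
        print(f"{name}: weights={weights}, bias={bias}, errors in last epoch={errors}")
```

The update only ever moves a single straight decision boundary, so no amount of training lets this unit separate the XOR points.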

To address these limitations, researchers began to develop more sophisticated neural network architectures that could learn more complex patterns.

Multilayer perceptron (MLP)

One of the fundamental developments in this area was the multilayer perceptron, or MLP, which added additional layers of artificial neurons to the Perceptron model. MLPs could learn more complex patterns by using multiple layers of neurons to process the input data, each layer learning to recognize a different aspect of the pattern.
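Adding one hidden layer is enough to represent XOR. The sketch below uses hand-chosen weights (illustrative, not learned) with step activations: one hidden unit detects OR, another detects AND, and the output fires when OR is true but AND is not. The names `step` and `xor_mlp` are hypothetical.

```python
def step(z):
    """Threshold activation: 1 for positive input, else 0."""
    return 1 if z > 0 else 0

def xor_mlp(x1, x2):
    """Two-layer network with hand-set weights that computes XOR."""
    # Hidden layer: each unit recognizes a different aspect of the input.
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # fires if at least one input is 1
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # fires only if both inputs are 1
    # Output layer combines them: "OR but not AND".
    return step(1.0 * h_or - 2.0 * h_and - 0.5)

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_mlp(a, b))
```

Each hidden unit draws one straight line; the output unit combines the two regions, producing a boundary no single-layer Perceptron can draw.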

Another significant development in artificial neural networks was backpropagation, which was popularized in the 1980s, notably by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986.

Backpropagation

Backpropagation is an algorithm for training neural networks: it computes how much each connection contributed to the error between the network's predictions and the target values by propagating that error backward from the output layer, and the connection strengths are then adjusted to reduce it. This allows the network to learn from its mistakes and improve its performance over time.
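A minimal sketch of backpropagation for a tiny network (two inputs, a hidden layer of sigmoid units, one sigmoid output) trained on XOR with squared error. The network size, learning rate, random seed, and all function names are illustrative assumptions. The backward pass applies the chain rule layer by layer, and a finite-difference check compares one backpropagated gradient against a numerical estimate.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_hidden=3, seed=0):
    """Small random weights for a 2-input, n_hidden-unit, 1-output network."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    """Return the hidden activations and the output for input x."""
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y

def loss(p, data):
    """Mean of 0.5 * (output - target)^2 over the data set."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)

def gradients(p, data):
    """Backpropagation: the gradient of `loss` with respect to every parameter."""
    n, k = len(data), len(p["W2"])
    g = {"W1": [[0.0, 0.0] for _ in range(k)], "b1": [0.0] * k, "W2": [0.0] * k, "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        d_out = (y - t) * y * (1 - y)  # error signal at the output unit (chain rule)
        g["b2"] += d_out / n
        for j in range(k):
            g["W2"][j] += d_out * h[j] / n
            # Send the error backward through W2 to hidden unit j.
            d_h = d_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d_h / n
            g["W1"][j][0] += d_h * x[0] / n
            g["W1"][j][1] += d_h * x[1] / n
    return g

def train(p, data, learning_rate=0.5, epochs=2000):
    """Plain gradient descent: step every parameter against its gradient."""
    for _ in range(epochs):
        g = gradients(p, data)
        p["b2"] -= learning_rate * g["b2"]
        for j in range(len(p["W2"])):
            p["W2"][j] -= learning_rate * g["W2"][j]
            p["b1"][j] -= learning_rate * g["b1"][j]
            p["W1"][j][0] -= learning_rate * g["W1"][j][0]
            p["W1"][j][1] -= learning_rate * g["W1"][j][1]
    return p

def numerical_grad_w1(p, data, j, i, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[j][i], used to check backprop."""
    original = p["W1"][j][i]
    p["W1"][j][i] = original + eps
    plus = loss(p, data)
    p["W1"][j][i] = original - eps
    minus = loss(p, data)
    p["W1"][j][i] = original  # restore the parameter
    return (plus - minus) / (2 * eps)

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    params = init_params()
    grads = gradients(params, XOR_DATA)
    print("backprop:", grads["W1"][0][1], "numerical:", numerical_grad_w1(params, XOR_DATA, 0, 1))
    print("loss before:", loss(params, XOR_DATA))
    train(params, XOR_DATA)
    print("loss after:", loss(params, XOR_DATA))
```

Whether a given run fully solves XOR depends on the initialization; small networks like this one can stall on a plateau, which is one reason practical training uses larger networks and refined optimizers.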

In the 1990s and early 2000s, advances in computer hardware and software made it possible to train larger and more complex neural networks, leading to significant improvements in the performance of these systems.

The 2000s - new techniques

In addition, researchers refined specialized neural network architectures, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), which are well suited to data with a spatial or temporal structure, respectively.
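The core operation of a CNN layer can be sketched as sliding a small kernel over an image and taking a weighted sum at each position. Strictly, this is a cross-correlation, which is what most deep learning libraries compute under the name "convolution". The 5x5 image and the vertical-edge kernel are illustrative; in a real CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation): no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = sum(kernel[i][j] * image[r + i][c + j]
                        for i in range(kh) for j in range(kw))
            row.append(total)
        output.append(row)
    return output

if __name__ == "__main__":
    # Dark left columns (0) and bright right columns (1): a vertical edge.
    image = [[0, 0, 1, 1, 1] for _ in range(5)]
    # Responds to left-to-right increases in brightness.
    kernel = [[-1, 0, 1],
              [-1, 0, 1],
              [-1, 0, 1]]
    for row in conv2d(image, kernel):
        print(row)
```

The same small kernel is reused at every position, so the layer detects the edge wherever it appears in the image; this weight sharing is what suits CNNs to spatially structured data.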

In recent years, artificial neural networks and machine learning have become increasingly popular and widely used in diverse applications, including image and speech recognition, natural language processing, and autonomous systems.

These technologies have the potential to transform many industries and have already had a significant impact on fields such as healthcare, finance, and transportation.

The development of artificial neural networks and machine learning has been a long and ongoing process with many key milestones and contributions. These technologies have the potential to transform how we interact with the world and make decisions, and they will likely continue to evolve in the coming years.

The birth of artificial intelligence research organizations and institutions

The birth of artificial intelligence (AI) research organizations and institutions can be traced back to the early days of AI itself. AI research has its roots in the 1950s and 1960s, with the development of early computer systems and the concept of artificial intelligence.

One of the earliest AI research organizations was the Artificial Intelligence Center (AIC) at SRI International (formerly the Stanford Research Institute), founded in 1966 by Charles Rosen. The AIC, supported largely by US Department of Defense funding, focused on a wide range of AI research topics, including natural language processing, robotics, and machine learning.

Another early AI research organization was the Massachusetts Institute of Technology's (MIT) Artificial Intelligence Laboratory, which began in 1959 as the MIT Artificial Intelligence Project, founded by John McCarthy and Marvin Minsky. Its research, funded largely by the Advanced Research Projects Agency (ARPA, later DARPA), focused on natural language processing, machine learning, and robotics.

From the late 1970s onward, research in artificial intelligence expanded and diversified, and several new AI research organizations and institutions were created. These included the Robotics Institute at Carnegie Mellon University, founded in 1979, and MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), formed in 2003 by merging the AI Lab with the Laboratory for Computer Science.

Today, AI research is conducted by various organizations and institutions, including universities, government agencies, and private companies. These organizations and institutions often collaborate and share resources to advance the field of AI and its practical applications.

Key developments in the fields of robotics and natural language processing

Robotics and natural language processing are two fields that have seen significant developments in recent years.

In robotics, some key developments include:

Autonomous vehicles

These systems, which include self-driving cars, drones, and other mobile robots, can navigate and operate without human intervention. They use a combination of sensors, such as lidar and cameras, and algorithms that interpret the sensor data to make decisions about their surroundings and how to navigate them.

Soft robotics

These robots are made from flexible materials, such as silicone or rubber, rather than rigid materials such as metal or hard plastic, making them more adaptable and able to handle a wider range of tasks, such as gripping delicate objects.

Collaborative robots

These are robots that are designed to work alongside humans rather than replace them, including robots that are used in manufacturing, logistics, and other industries to assist with tasks such as material handling and assembly.

Humanoid robots

These are robots that are designed to look and behave like humans. They are often used for tasks that benefit from a human-like presence, such as customer service or entertainment.

Natural language processing (NLP) is a subfield of artificial intelligence that deals with the interaction between computers and human (natural) languages. It involves developing algorithms and models to understand, generate, and analyze human language. NLP has been an active research area for several decades and has made significant progress in recent years. In robotics, NLP enables robots to understand and respond to human commands and instructions.

Some key developments in NLP include:

Natural Language Generation

This involves developing algorithms to generate human-like text or speech based on a given set of rules or data. In robotics, natural language generation can produce spoken or written responses to user queries or commands, making it easier for humans to interact with robots.

Natural Language Understanding

This involves developing algorithms to understand and interpret human language. In robotics, natural language understanding can enable robots to interpret and execute commands or instructions given by humans.

Dialogue Systems

These systems enable robots to converse with humans using natural language. Dialogue systems can answer questions, provide information, or help users complete tasks. They are used in various applications, such as customer service chatbots or virtual assistants like Siri or Alexa.

Text-to-Speech and Speech-to-Text

These techniques enable robots to convert written text to speech and speech to text, respectively, which is useful for communicating with humans through spoken language.

Text generation

This is the ability of a machine to generate coherent and meaningful text based on a given prompt or topic. Recent progress in text generation has been driven by advanced language models, such as GPT-3, that can generate human-like text.

Sentiment analysis

Sentiment analysis is a machine's ability to understand and analyze the sentiment or emotion expressed in text or speech. In robotics, sentiment analysis can gauge a user's emotional response to a robot's actions or responses. The analysis is also helpful for tasks such as customer service, where it can be used to identify and respond to customer complaints or feedback.
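One of the simplest approaches to sentiment analysis is lexicon-based scoring: count positive and negative words, flipping a word's polarity when it directly follows a negation. The tiny word lists and function names below are hypothetical; practical systems use large curated lexicons or, more commonly today, trained models.

```python
import re

# Hypothetical mini-lexicons; real lexicons contain thousands of scored words.
POSITIVE = {"good", "great", "helpful", "love", "excellent", "fast"}
NEGATIVE = {"bad", "slow", "broken", "hate", "terrible", "rude"}
NEGATIONS = {"not", "never", "no"}

def sentiment_score(text):
    """Return (#positive - #negative) words, flipping polarity after a negation."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = 1 if word in POSITIVE else -1 if word in NEGATIVE else 0
        if polarity and i > 0 and words[i - 1] in NEGATIONS:
            polarity = -polarity  # "not helpful" counts as negative
        score += polarity
    return score

def label(text):
    """Map the score to a coarse sentiment label."""
    score = sentiment_score(text)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"

if __name__ == "__main__":
    for review in ("The robot was fast and helpful!",
                   "Support was slow and not helpful.",
                   "The package arrived on Tuesday."):
        print(label(review), "|", review)
```

Word counting misses sarcasm, context, and longer-range negation, which is why learned models have largely replaced it for customer-feedback analysis.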

Machine translation

This is the ability of a machine to translate text or speech from one language to another. Machine translation has become increasingly accurate and widely used in recent years, thanks to deep learning algorithms and the availability of large amounts of training data. Machine translation can enable robots to communicate with users in different languages.

*

These are a few key developments in robotics and natural language processing. Both areas are rapidly evolving and will likely continue to see significant progress in the future. Overall, the development of NLP techniques has enabled robots to better understand and communicate with humans, making it easier for humans to interact with and use robots for various tasks.

*

The Golden Age of Artificial Intelligence

The development of expert systems and decision-making algorithms

Expert systems

Expert systems, also known as knowledge-based systems, are computer programs designed to mimic a human expert's decision-making ability in a specific domain. They are based on the idea of artificial intelligence, which aims to replicate the cognitive abilities of humans in machines.

Expert systems were developed in the 1970s and have since evolved significantly, leading to the development of various types of decision-making algorithms.

An expert system has three main components:

- the knowledge base

- the inference engine

- the user interface

The knowledge base is a collection of facts and rules about a particular domain, which the inference engine uses to make decisions.

The inference engine represents the "brain" of the expert system and uses logic and reasoning to draw conclusions based on the knowledge base. The user interface is how the expert system communicates with the user, and it can be a graphical user interface (GUI) or a command-line interface (CLI).

Expert systems have been applied in many fields, including medicine, finance, and engineering, to assist human experts in making decisions and solving problems. They can process large amounts of data, perform complex calculations, and provide recommendations based on their knowledge base.

Decision-making algorithms

There are several types of decision-making algorithms that can be used in expert systems, including rule-based approaches, decision trees, and neural networks.

Rule-based systems are based on a set of "if-then" rules used to make decisions. For example, a rule-based expert system for diagnosing a medical condition might have the following rule: "If the patient has a fever and a rash, then the condition is likely measles." The inference engine would use this rule and other rules in the knowledge base to make a diagnosis based on the patient's symptoms.
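A rule-based inference engine can be sketched with forward chaining: repeatedly fire any rule whose conditions are all among the known facts, adding its conclusion, until nothing new can be inferred. The rules below, including one modeled on the measles example, are toy illustrations only, not medical guidance; the names `RULES` and `forward_chain` are hypothetical.

```python
# Knowledge base: each rule is (set of conditions, conclusion). Toy examples only.
RULES = [
    ({"fever", "rash"}, "likely measles"),
    ({"likely measles", "not vaccinated"}, "recommend measles test"),
    ({"cough", "fever"}, "possible flu"),
]

def forward_chain(facts, rules):
    """Inference engine: fire rules until no new conclusions can be added."""
    known = set(facts)
    fired = []  # conclusions in the order they were drawn, usable as an explanation
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                fired.append(conclusion)
                changed = True
    return known, fired

if __name__ == "__main__":
    known, trail = forward_chain({"fever", "rash", "not vaccinated"}, RULES)
    print(trail)
```

Keeping the rules separate from the engine mirrors the knowledge base / inference engine split described above: domain experts can edit `RULES` without touching `forward_chain`, and the `fired` trail lets the system explain how it reached a conclusion.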

Decision trees are another type of decision-making algorithm that is often used in expert systems. They consist of a series of nodes and branches: each internal node represents a decision point, and each branch represents a possible outcome of that decision. The inference engine follows the branches of the tree based on the input data and ultimately arrives at a conclusion at a leaf of the tree.
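A decision tree can be sketched as nested nodes: each internal node tests one attribute and follows the branch matching the case's value, until a leaf gives the conclusion. The tree (a hypothetical diagnosis of why a machine will not start) and the `Node` structure are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

@dataclass
class Node:
    """Decision point: tests one attribute, with one branch per possible value."""
    attribute: str
    branches: Dict[str, Union["Node", str]] = field(default_factory=dict)

def classify(tree, case):
    """Follow the branches matching the case's values until a leaf (a string) is reached."""
    node = tree
    while isinstance(node, Node):
        node = node.branches[case[node.attribute]]
    return node

# Hypothetical tree for diagnosing why a machine will not start.
TREE = Node("power_light", {
    "off": Node("plugged_in", {"no": "plug in the machine", "yes": "check the fuse"}),
    "on": Node("error_code", {"none": "call a technician", "E1": "refill the water tank"}),
})

if __name__ == "__main__":
    print(classify(TREE, {"power_light": "off", "plugged_in": "yes"}))
```

Each case only needs the attributes on its own path through the tree, so a diagnosis asks just the questions relevant to it.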

Neural networks can be used in expert systems to make decisions based on large amounts of data, such as in image recognition or natural language processing.

In summary, expert systems are computer programs that mimic the decision-making ability of a human expert in a specific domain. They use various decision-making algorithms, such as rule-based systems, decision trees, and neural networks, to make decisions based on their knowledge base.

The emergence of AI in industry and business

Artificial intelligence (AI) has become increasingly important in industry and business in recent years. The development of AI technologies has opened up new possibilities for automating tasks, improving efficiency, and making better decisions.

AI has a long history, with early research dating back to the 1950s. However, it was not until the 21st century that AI began to see widespread adoption in industry and business, due to several factors, including:

Advances in computer hardware and software

AI algorithms require significant computational resources to function effectively. In the past, computers were not powerful enough to support these algorithms.

However, as computer hardware and software have become more advanced, it has become possible to build and deploy AI systems that can handle large amounts of data and perform complex tasks.

Availability of data

AI algorithms require large amounts of data to learn and improve. The explosion of data available through the internet and other sources has made it possible to feed AI systems with the data they need to learn and improve.

Development of new AI techniques

Researchers have developed a wide range of AI techniques over the years, including machine learning, natural language processing, and computer vision.

These techniques have made it possible to build AI systems that can perform various tasks, including understanding and interpreting language, analyzing images and video, and making predictions and decisions.

AI is being used in many different industries and business applications. Some examples include:

- Manufacturing: AI can optimize production processes, improve quality control, and predict equipment failures.

- Healthcare: AI can analyze medical records, assist with diagnosis and treatment, and identify patterns that may indicate potential health risks.

- Finance: AI can identify fraudulent activity, assess credit risk, and make investment decisions.

- Retail: AI can personalize recommendations, optimize pricing and inventory, and improve customer service.

Overall, the adoption of AI in industry and business has been driven by the desire to improve efficiency, reduce costs, and make better decisions. As AI technologies continue to advance, we will likely see even more widespread adoption of AI in the coming years.

The rise of AI-powered personal assistants and virtual assistants

AI-powered personal and virtual assistants are computer programs that assist users with tasks, answer questions, and provide information on various topics. These assistants use artificial intelligence (AI) and natural language processing (NLP) to understand and respond to user requests in a human-like manner.

The rise of AI-powered personal and virtual assistants can be traced back to the development of early AI technologies in the 1950s and 1960s. However, it was only with the advent of the internet and the proliferation of smartphones and other personal devices in the 2000s that these assistants became widely available and useful to the general public.

One of the first widely used AI-powered personal assistants was Apple's Siri, which was introduced as a feature of the iPhone 4S in 2011. Siri uses NLP to understand user requests and respond through text or speech. Other tech companies, such as Google and Amazon, followed suit by developing their own AI-powered personal assistants, including Google Assistant and Alexa.

These assistants have become increasingly popular in recent years due to their ability to perform various tasks, including setting reminders, sending texts and emails, making phone calls, and providing information on weather, traffic, and news. They can also be integrated with various smart home devices, allowing users to control their homes using voice commands.

In addition to personal assistants, virtual assistants have also become increasingly popular in the business world. These assistants, often accessed through chatbots or messaging apps, are designed to assist employees with scheduling meetings, booking travel, and accessing company information.

The rise of AI-powered personal and virtual assistants can be attributed to the increasing accessibility and versatility of AI technologies and the convenience and efficiency they provide to users. These assistants are expected to evolve and become more widely used.

The potential risks and benefits of AI

Artificial intelligence (AI) has the potential to bring many benefits to society, but it also carries with it certain risks and potential negative consequences.

Benefits of AI

Increased Efficiency: AI can automate specific tasks, allowing humans to be more productive and efficient. For example, AI can analyze data, perform complex calculations, and carry out repetitive tasks quickly and accurately.

Improved Decision-Making: AI can assist decision-making by providing insights and recommendations based on data analysis, leading to better-informed decisions in fields such as healthcare, finance, and transportation.

Enhanced Customer Experience: AI can improve customer service by providing personalized recommendations and assistance to customers through chatbots and virtual assistants.

Increased Access to Information: AI can process and analyze large amounts of data, making it easier for individuals and organizations to access and understand information.

New Job Opportunities: Even as AI automates certain tasks, it may create new job opportunities in data science, machine learning, and AI development.

Risks and Negative Consequences of AI

Unemployment: As AI automates tasks, it may lead to job displacement and unemployment, particularly for low-skilled workers.

Bias: AI systems can reflect the biases of the data they are trained on and of the individuals who design them, leading to unfair treatment of particular groups.

Security and Privacy: AI systems can be vulnerable to hacking and data breaches, leading to concerns about security and privacy.

Loss of Control: As AI becomes more advanced, there is a risk that it could surpass human intelligence and potentially pose a threat to humanity if it is not designed with appropriate controls.

Ethical Concerns: As AI makes decisions and takes actions, it raises ethical concerns about accountability and responsibility.

It is important to consider both AI's potential benefits and risks as it continues to develop and be integrated into society.

The Future of Artificial Intelligence

Predictions and projections for the future of AI

Artificial intelligence (AI) has made remarkable strides in recent years, and many experts predict that it will continue to advance rapidly in the coming years.

There are a wide variety of predictions and projections for the future of AI, ranging from optimistic to dystopian. Some experts believe that AI will bring about significant technological and social changes, while others are more cautious in their predictions.

One common prediction is that AI will become increasingly integrated into everyday life. The integration could include using AI in transportation, healthcare, education, and many other fields. Some experts predict that AI will revolutionize these industries, making them more efficient, practical, and accessible to a broader range of people.

Other experts, however, caution that integrating AI into these fields could lead to job displacement and other social changes that must be carefully managed.

Another prediction is that AI will become more intelligent and autonomous over time. Some experts believe that AI will eventually surpass human intelligence, potentially leading to a technological singularity. Surpassing human intelligence could have significant consequences, both positive and negative, for society. For example, AI might solve complex problems that humans cannot, or it might be able to automate many tasks currently performed by humans.

On the other hand, there is also the potential for AI to pose a threat to humans, either intentionally or unintentionally.

There are also predictions about the potential ethical and moral implications of AI. Some experts believe that AI could be used to promote social good, for example, by helping solve environmental problems or promote global peace and understanding. Others are concerned about the potential for AI to be used to perpetuate injustice or undermine human autonomy.

The future of AI is difficult to predict with certainty. However, it is clear that AI will continue to have a decisive role in shaping the world in the coming years and that it will be necessary for society to consider the ethical and moral implications of these developments carefully.

The potential impact of AI on jobs and the economy

The potential impact of AI on jobs and the economy is a topic of much debate and speculation. Some experts believe that AI could lead to widespread job displacement as machines and algorithms take over tasks that humans currently perform, resulting in widespread unemployment if people cannot find work in a world where many jobs have been automated.

Other specialists believe that AI could create new jobs and industries as people find new ways to improve their lives and businesses. For example, AI could automate mundane tasks, freeing people to focus on more creative and rewarding work. It could also be used to develop new products and services, creating new markets and opportunities for employment.

In general, the impact of AI on jobs and the economy is likely to be complex and multifaceted. It will depend on how quickly AI develops, how it is used, and what steps governments and businesses take to prepare for and manage the transition.

Individuals, organizations, and society must consider AI's potential impacts and take steps to ensure its benefits are distributed fairly and equitably.

Ethical considerations and challenges in the future of AI

As artificial intelligence (AI) advances and becomes more prevalent in society, several ethical considerations and challenges are likely to arise. Some of these include:

- Bias and discrimination:

- AI systems can be biased in decision-making if they are trained on biased data and can thus result in discrimination against certain groups of people, such as those belonging to minority ethnicities or genders.

- Privacy and security: AI systems often handle large amounts of sensitive personal data, raising concerns about privacy and about how that data is protected. There is also the risk that AI systems could be used for malicious purposes, such as cyber-attacks or spreading misinformation.

- Unemployment: As AI systems become more advanced, there is a risk that they could automate jobs currently performed by humans, leading to widespread unemployment.

- Lack of accountability: It can be difficult to determine who is responsible when an AI system makes a mistake or causes harm, which in turn makes it hard to hold anyone accountable for the system's actions.

- Lack of transparency: Some AI systems, particularly those using deep learning techniques, can be difficult to understand and interpret, so it is hard to know how they reach their decisions. This lack of transparency makes these systems harder to trust and regulate.

- Ethical decision-making: AI systems will likely be used to make important decisions affecting people's lives, such as in the criminal justice system or healthcare. It is essential to consider how these systems will be programmed to make ethical decisions and how to ensure that they are aligned with human values.

These are just a few of the ethical considerations and challenges that will need to be addressed as AI advances. Researchers, policymakers, and society as a whole will need to consider these issues carefully and work toward solutions that ensure AI is developed and used ethically.


References and further reading

Castillo D, Lakshminarayanan V, Rodríguez-Álvarez MJ. MR Images, Brain Lesions, and Deep Learning. Applied Sciences. 2021; 11(4):1675. https://doi.org/10.3390/app11041675

Fourcade A, Khonsari RH. Deep learning in medical image analysis: A third eye for doctors. J Stomatol Oral Maxillofac Surg. 2019 Sep;120(4):279-288. DOI: 10.1016/j.jormas.2019.06.002. Epub 2019 Jun 26. PMID: 31254638.

Kaul V, Enslin S, Gross SA. History of artificial intelligence in medicine. Gastrointest Endosc. 2020 Oct;92(4):807-812. DOI: 10.1016/j.gie.2020.06.040. Epub 2020 Jun 18. PMID: 32565184.

Is Artificial Intelligence Going to Replace Dermatologists? Available online: https://www.mdedge.com/dermatology/article/

Mintz Y, Brodie R. Introduction to artificial intelligence in medicine. Minim Invasive Ther Allied Technol. 2019 Apr;28(2):73-81. DOI: 10.1080/13645706.2019.1575882. Epub 2019 Feb 27. PMID: 30810430.

McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the Dartmouth Summer Research Project on Artificial Intelligence, August 31, 1955. AI Mag. 2006, 27.

Kenneth Mark Colby, Franklin Dennis Hilf, Sylvia Weber, Helena C Kraemer. Turing-like indistinguishability tests for the validation of a computer simulation of paranoid processes. Artificial Intelligence Volume 3, 1972, Pages 199-221. DOI: 10.1016/0004-3702(72)90049-5

Feigenbaum, E.A. (2003). Some challenges and grand challenges for computational intelligence. J. ACM, 50, 32-40. DOI: 10.1145/602382.602400

Epstein Robert, Roberts Gary, Beber Grace. Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer, ISBN 978-1-4020-9624-2. Springer Science+Business Media B.V., 2009. DOI: 10.1007/978-1-4020-6710-5

Joseph Weizenbaum. ELIZA — a computer program for the study of natural language communication between man and machine. Communications of the ACM, Volume 26, Issue 1, Jan. 1983, pp. 23-28. DOI: 10.1145/357980.357991

A. M. Turing. Computing Machinery and Intelligence. Mind, Volume LIX, Issue 236, October 1950, Pages 433-460. DOI: 10.1093/mind/LIX.236.433

Traiger, S. Making the Right Identification in the Turing Test. Minds and Machines 10, 561-572 (2000). DOI: 10.1023/A:1011254505902

Sterrett, S.G. Turing's Two Tests for Intelligence. Minds and Machines 10, 541-559 (2000). DOI: 10.1023/A:1011242120015

Rosenblatt, F. (1958). The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 65(6), 386-408. DOI: 10.1037/h0042519

Michael Collins. 2002. Discriminative Training Methods for Hidden Markov Models: Theory and Experiments with Perceptron Algorithms. In Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing (EMNLP 2002), pages 1-8. Association for Computational Linguistics. DOI: 10.3115/1118693.1118694

Mohri, M., & Rostamizadeh, A. (2013). Perceptron Mistake Bounds. arXiv. DOI: 10.48550/arXiv.1305.0208

S. I. Gallant, "Perceptron-based learning algorithms," in IEEE Transactions on Neural Networks, vol. 1, no. 2, pp. 179-191, June 1990. DOI: 10.1109/72.80230

Trevor Hastie, Robert Tibshirani, Jerome Friedman. The Elements of Statistical Learning, Data Mining, Inference, and Prediction, Second Edition, Springer New York, NY, 26 August 2009. DOI: 10.1007/978-0-387-84858-7

P. D. Wasserman and T. Schwartz, "Neural networks. II. What are they and why is everybody so interested in them now?," in IEEE Expert, vol. 3, no. 1, pp. 10-15, Spring 1988. DOI: 10.1109/64.2091

Ronan Collobert, Samy Bengio. Links between perceptrons, MLPs and SVMs. ICML '04: Proceedings of the Twenty-First International Conference on Machine Learning, July 2004. DOI: 10.1145/1015330.1015415

Rumelhart, D., Hinton, G. & Williams, R. Learning representations by back-propagating errors. Nature 323, 533-536 (1986). DOI: 10.1038/323533a0

Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw. 2015 Jan;61:85-117. DOI: 10.1016/j.neunet.2014.09.003. Epub 2014 Oct 13. PMID: 25462637.

Fitz H, Chang F. Language ERPs reflect learning through prediction error propagation. Cogn Psychol. 2019 Jun;111:15-52. DOI: 10.1016/j.cogpsych.2019.03.002. Epub 2019 Mar 25. PMID: 30921626.

G. Guida and G. Mauri, "Evaluation of natural language processing systems: Issues and approaches," in Proceedings of the IEEE, vol. 74, no. 7, pp. 1026-1035, July 1986. DOI: 10.1109/PROC.1986.13580

Yoav Goldberg. A Primer on Neural Network Models for Natural Language Processing. JAIR Vol. 57 (2016). DOI: 10.1613/jair.4992

Juri Yanase, Evangelos Triantaphyllou. A systematic survey of computer-aided diagnosis in medicine: Past and present developments. Expert Systems with Applications Volume 138, 30 December 2019, 112821. DOI: 10.1016/j.eswa.2019.112821

Ledley RS, Lusted LB. Reasoning foundations of medical diagnosis; symbolic logic, probability, and value theory aid our understanding of how physicians reason. Science. 1959 Jul 3;130(3366):9-21. DOI: 10.1126/science.130.3366.9. PMID: 13668531.

Bleich HL. Computer-based consultation. Electrolyte and acid-base disorders. Am J Med. 1972 Sep;53(3):285-91. doi: 10.1016/0002-9343(72)90170-2. PMID: 4559984

Gorry GA, Kassirer JP, Essig A, Schwartz WB. Decision analysis as the basis for computer-aided management of acute renal failure. Am J Med. 1973 Oct;55(3):473-84. doi: 10.1016/0002-9343(73)90204-0. PMID: 4582702

Edward H Shortliffe, Bruce G Buchanan. A model of inexact reasoning in medicine. Mathematical Biosciences, Volume 23, Issues 3-4, April 1975, Pages 351-379. DOI: 10.1016/0025-5564(75)90047-4

Littman, M. L. (1996). Algorithms for sequential decision-making. Brown University ProQuest Dissertations Publishing, 1996.

Woolery LK, Grzymala-Busse J. Machine learning for an expert system to predict preterm birth risk. J Am Med Inform Assoc. 1994 Nov-Dec;1(6):439-46. doi: 10.1136/jamia.1994.95153433. PMID: 7850569; PMCID: PMC116227

Haddawy P, Suebnukarn S. Intelligent clinical training systems. Methods Inf Med. 2010;49(4):388-9. PMID: 20686730.

William Mettrey. An Assessment of Tools for Building Large Knowledge-Based Systems. AI Magazine.

Chung, J., Gulcehre, C., Cho, K. & Bengio, Y. (2015). Gated Feedback Recurrent Neural Networks. Proceedings of the 32nd International Conference on Machine Learning, in Proceedings of Machine Learning Research 37:2067-2075. Available from https://proceedings.mlr.press/v37/chung15.html

Romem Yoram. The social construction of Expert Systems. Human Systems Management, vol. 26, no. 4, pp. 291-309, 2007. DOI: 10.3233/HSM-2007-26406